DATA ENGINEERING SOLUTIONS

Build Enterprise-Scale Pipelines with Qlik's Data Engineering Solutions

Accelerate your data lifecycle with Qlik's data engineering solutions. Architect scalable pipelines, transform data efficiently, and ensure analytics-ready delivery across cloud and on-premises environments.

How do Qlik's data engineering solutions work?

Step 1 - Ingest data from diverse systems seamlessly

Step 2 - Process, cleanse, and transform data efficiently

Step 3 - Orchestrate multi-stage pipelines with automation

Step 4 - Deliver and govern data for analytics access

Why Qlik data engineering solutions?

Enterprise-grade capabilities designed for scalable data engineering

Enterprise-grade governance and data lineage controls

Track data from source to destination with comprehensive lineage visualization, impact analysis, and audit trails that ensure compliance and enable confident troubleshooting.

Scalable across cloud, hybrid, and on-premises deployments

Deploy pipelines wherever your data lives, with consistent tooling across AWS, Azure, GCP, on-premises infrastructure, and hybrid architectures, and without vendor lock-in.

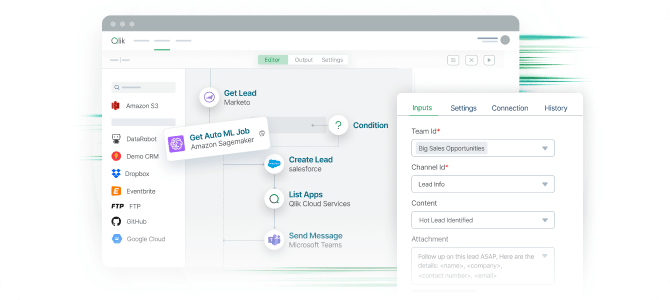

Low-code workflow design for speed and flexibility

Accelerate pipeline development with visual design tools while maintaining the ability to inject custom code for complex transformation logic when needed.

Built for data engineers, architects, and analysts

Serve diverse skill levels with interfaces tailored to each role, from visual builders for analysts to code-first environments for experienced data engineers.

Proven performance in large-scale data workloads

Handle enterprise volumes with optimized execution engines that process terabytes of data efficiently through intelligent parallelization and resource management.

Trusted by leading enterprises worldwide

What our customers say

Connect to 500+ data sources with Qlik’s analytics integrations

Resources to help you succeed with data engineering

Data engineering solutions FAQs

Qlik's data engineering software supports more than 500 connectors, including relational databases, NoSQL stores, cloud applications, streaming platforms, file systems, and APIs, with both pre-built and custom connector options.

We support both real-time and batch processing. Real-time pipelines use change data capture for continuous ingestion, batch pipelines handle scheduled bulk loads, and you can mix both in a unified workflow.
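
To illustrate the idea of mixing a bulk load with change data capture, here is a minimal, generic Python sketch. It is not Qlik's API or implementation: the `ChangeEvent` type, the `batch_load` and `apply_changes` functions, and the `id` key column are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass
class ChangeEvent:
    """Hypothetical CDC event: one captured change to a source row."""
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row contents (unused for deletes)


def batch_load(rows: List[dict]) -> Dict[int, dict]:
    """Scheduled bulk load: build the target table keyed by the 'id' column."""
    return {row["id"]: dict(row) for row in rows}


def apply_changes(table: Dict[int, dict], events: Iterable[ChangeEvent]) -> Dict[int, dict]:
    """Continuous ingestion: apply captured changes to the target, in order."""
    for event in events:
        if event.op == "delete":
            table.pop(event.key, None)
        elif event.op in ("insert", "update"):
            table[event.key] = dict(event.row)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return table


# Seed the target with a bulk load, then keep it current with change events.
target = batch_load([{"id": 1, "name": "Ada"}, {"id": 2, "name": "Bo"}])
apply_changes(target, [
    ChangeEvent("update", 1, {"id": 1, "name": "Ada L."}),
    ChangeEvent("delete", 2),
    ChangeEvent("insert", 3, {"id": 3, "name": "Cy"}),
])
```

In this sketch the batch step establishes a baseline and the change events keep it current, which is one common way the two patterns are combined.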

Yes, our platform supports both visual low-code development and full code-based pipeline creation, allowing data engineers to choose the approach that matches their requirements and preferences.

We provide built-in data quality rules, validation checks, anomaly detection, and profiling capabilities that monitor data throughout the pipeline with configurable alerts and automated remediation options.
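
As a rough picture of what rule-based validation inside a pipeline can look like, the following generic Python sketch checks each row against named rules and separates clean rows from failures. It is an assumption-laden illustration, not the product's rule engine: `not_null`, `in_range`, `validate`, and the field names are hypothetical, and anomaly detection, alerting, and remediation are out of scope here.

```python
from typing import Callable, Dict, List, Tuple

# A rule takes a row and returns True when the row passes.
Rule = Callable[[dict], bool]


def not_null(field: str) -> Rule:
    """Rule: the field must be present and not None."""
    return lambda row: row.get(field) is not None


def in_range(field: str, low: float, high: float) -> Rule:
    """Rule: the field must be a number between low and high, inclusive."""
    def check(row: dict) -> bool:
        value = row.get(field)
        return isinstance(value, (int, float)) and low <= value <= high
    return check


def validate(rows: List[dict], rules: Dict[str, Rule]) -> Tuple[List[dict], List[Tuple[int, List[str]]]]:
    """Return (rows that pass every rule, [(row index, names of failed rules)])."""
    passed, failures = [], []
    for index, row in enumerate(rows):
        broken = [name for name, rule in rules.items() if not rule(row)]
        if broken:
            failures.append((index, broken))
        else:
            passed.append(row)
    return passed, failures


rules = {"id_not_null": not_null("id"), "amount_in_range": in_range("amount", 0, 100)}
rows = [{"id": 1, "amount": 10}, {"id": None, "amount": 5}, {"id": 3, "amount": -1}]
clean_rows, failed_rows = validate(rows, rules)
```

The failure list carries the row index and the rule names, which is the kind of detail a configurable alert could report.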