Is the generative AI honeymoon over already? After months of buzz around its transformative possibilities, excitement is now starting to be tempered by growing concern about trust and data privacy. Just in the last few weeks, several lawsuits have been launched against AI companies, including a well-publicized charge of copyright infringement. Governments across the world are also taking steps to investigate the activities of these companies and bring forth new regulations such as the EU AI Act.

Within our Qlik universe, our customers are telling us that data privacy and security are top of mind for them as they embark on their own generative AI journey. How do you take advantage of the value that AI can offer while ensuring that the privacy of your data is maintained and misinformation is prevented, so that you ultimately avoid bad decisions and ramifications for your business? It’s a movie we have seen before, as data privacy and security have been critical to data and analytics initiatives, including migration to the cloud.

As you define your own strategy for using generative AI in your organization, the first thing you need to decide is which approach you want to adopt for your large language model (LLM). One preferred approach that we see emerging from our conversations with our customers, as well as activities from our partners Databricks and Snowflake, is the implementation of an enterprise LLM trained on the organization’s proprietary data. Building an enterprise LLM in a safe and compliant way assumes that you are running your model in a secure environment that protects your data and your customers’ data. It also assumes that you choose a foundation model that does not use protected information. And to train this model successfully, you need to make sure that you have good data.

As I wrote in a previous post, generative AI is all about the data; large language models are only as good as the data on which they are trained, so establishing a trusted data foundation with a modern data fabric is imperative. I spoke recently to one customer who has done exactly this, and the foundation for their LLM is essentially the same data fabric they created with Qlik to support traditional AI and analytics. As you plan your implementation strategy and infrastructure investments for your enterprise LLM, here are 5 essential ways to ensure that your data foundation is secure and ready for generative AI.

1. Smart movement and integration of your data

You undoubtedly have a lot of data in a broad range of formats, from a vast array of sources. For generative AI, this is actually a good thing, as large language models benefit from being trained on large data sets. But to enable a seamless and efficient flow of that information into content generation, you need to be able to identify, gather, and move this data into a data warehouse or data lake.

How you trust it: by leveraging a secure point-to-point replication architecture that ensures low data latency and maximum data availability.

2. Continuous update of your data

The delivery of always fresh data enables large language models to adapt, improve, and generate contextually relevant and coherent outputs for a wide array of language-based tasks and applications. That necessitates a data management approach which supports real-time change data capture to continually ingest and replicate data when and where it’s needed.

How you trust it: by streaming real-time data, you optimize the accuracy and relevancy of results your large language model produces.
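To make the idea of change data capture concrete, here is a minimal sketch of how a stream of change events might be applied to keep a target copy in step with its source. It assumes a simplified event shape; `ChangeEvent` and `apply_changes` are hypothetical names for illustration, not part of any Qlik product or API, and a real pipeline would read events from a database log or a message stream rather than a Python list.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    """Hypothetical, simplified change record captured from a source system."""
    op: str                               # "insert", "update", or "delete"
    key: str                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


def apply_changes(
    target: Dict[str, Dict[str, Any]], events: Iterable[ChangeEvent]
) -> Dict[str, Dict[str, Any]]:
    """Apply change events, in order, to an in-memory replica keyed by primary key."""
    for event in events:
        if event.op in ("insert", "update"):
            if event.row is None:
                raise ValueError(f"{event.op} event for key {event.key!r} has no row image")
            target[event.key] = dict(event.row)  # copy so later edits to the event don't leak in
        elif event.op == "delete":
            target.pop(event.key, None)          # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {event.op!r}")
    return target


# Illustrative data only.
replica = {"1": {"name": "Ada", "tier": "gold"}}
events = [
    ChangeEvent("update", "1", {"name": "Ada", "tier": "platinum"}),
    ChangeEvent("insert", "2", {"name": "Grace", "tier": "silver"}),
    ChangeEvent("delete", "1"),
]
apply_changes(replica, events)
```

Because only the changes travel, the target stays current without repeatedly reloading the full data set, which is the property that matters when the model needs fresh context.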

3. Optimized transformation of your data

For your data to be consumption-ready for your large language model, it needs to be appropriately transformed from its raw state. You need the flexibility to execute these transformations in the most efficient manner for your target system. For example, push-down SQL is a great fit for a cloud data warehouse, whereas a Spark cluster running Spark SQL is more appropriate for a data lake.

How you trust it: by ensuring that data models and data transformation logic are available and used for model tuning, to deliver optimal results in code generation for training your model.
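As a rough illustration of what "push-down" means, the sketch below runs the cleanup as a single SQL statement inside the database, so the raw rows never leave the engine. It uses Python's built-in `sqlite3` module purely as a stand-in for a cloud data warehouse; the table names `tickets_raw` and `tickets_clean` and the function `build_clean_table` are assumptions made up for this example.

```python
import sqlite3


def build_clean_table(conn: sqlite3.Connection) -> int:
    """Run the transformation inside the database and return the cleaned row count."""
    conn.execute("DROP TABLE IF EXISTS tickets_clean")
    # The whole transformation is one SQL statement executed by the engine:
    # normalize the product name, trim the text, and drop empty bodies.
    conn.execute(
        """
        CREATE TABLE tickets_clean AS
        SELECT id,
               LOWER(TRIM(product)) AS product,
               TRIM(body)           AS body
        FROM tickets_raw
        WHERE body IS NOT NULL AND TRIM(body) <> ''
        """
    )
    return conn.execute("SELECT COUNT(*) FROM tickets_clean").fetchone()[0]


# Illustrative data only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets_raw (id INTEGER PRIMARY KEY, product TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO tickets_raw VALUES (?, ?, ?)",
    [
        (1, "  Widget ", " Screen flickers "),
        (2, "GADGET", None),
        (3, "gadget", "   "),
    ],
)
clean_count = build_clean_table(conn)
clean_rows = conn.execute("SELECT id, product, body FROM tickets_clean ORDER BY id").fetchall()
```

The same logic could be expressed in Spark SQL for a data lake; the point is that the transformation runs where the data lives rather than in the client.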

4. Access to quality data

Data quality is paramount for generative AI as it directly influences the reliability, accuracy, and coherence of the model's outputs. By using high-quality data during training, the model can learn meaningful patterns and associations, ensuring that it generates contextually appropriate and valuable content.

How you trust it: by leveraging solutions that can automatically clean and profile data in real time, so you don’t have to worry about training your model with bad data.
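For a sense of what profiling involves at its simplest, here is a small sketch that counts missing required fields and exact duplicate records before data is handed to training. The function name `profile` and the sample fields are hypothetical, and a dedicated data quality tool would check far more (formats, ranges, referential integrity, drift).

```python
from typing import Any, Dict, Iterable, List


def profile(rows: Iterable[Dict[str, Any]], required: List[str]) -> Dict[str, Any]:
    """Count rows, missing required values per field, and exact duplicate rows."""
    total = 0
    missing = {field: 0 for field in required}
    seen = set()
    duplicates = 0
    for row in rows:
        total += 1
        for field in required:
            value = row.get(field)
            # Treat None and blank strings as missing.
            if value is None or (isinstance(value, str) and not value.strip()):
                missing[field] += 1
        # Order-independent fingerprint of the whole row, used to spot exact duplicates.
        fingerprint = tuple(sorted((key, repr(val)) for key, val in row.items()))
        if fingerprint in seen:
            duplicates += 1
        else:
            seen.add(fingerprint)
    return {"rows": total, "missing": missing, "duplicates": duplicates}


# Illustrative data only.
records = [
    {"id": 1, "question": "How do I reset my password?", "answer": "Use the reset link."},
    {"id": 2, "question": "Where is my invoice?", "answer": ""},
    {"id": 1, "question": "How do I reset my password?", "answer": "Use the reset link."},
    {"id": 3, "question": None, "answer": "Contact support."},
]
report = profile(records, required=["question", "answer"])
```

A report like this can act as a gate: if missing or duplicate counts exceed a threshold you choose, the batch is held back instead of being used for training.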

5. Governance of your data

Data governance is vital for generative AI because it ensures the responsible and effective use of data by your large language model. That can be achieved not only through established strategies and policies for data collection, curation, and storage, but also through technology that automates these processes end-to-end across your data pipeline. For example, you may want to automatically keep personally identifiable information (PII) out of model training.

How you trust it: by leveraging catalog and lineage solutions to help automatically find and document any relationships between datasets and validate data accuracy and consistency.
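As one simple illustration of protecting PII before training, the sketch below masks email addresses and US-style Social Security numbers in free text. The patterns and the `mask_pii` function are assumptions for this example only; regular expressions catch just the most obvious cases, and a governed pipeline would typically rely on catalog-driven classification and policy rather than hand-written patterns.

```python
import re

# Deliberately simple patterns; they will miss many real-world PII formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace matched email addresses and SSN-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = US_SSN.sub("[SSN]", text)
    return text


# Illustrative data only.
sample = "Contact jane.doe@example.com, SSN 123-45-6789, about order 4471."
masked = mask_pii(sample)
```

Masking at ingestion, rather than at training time, means every downstream consumer of the data set inherits the same protection.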

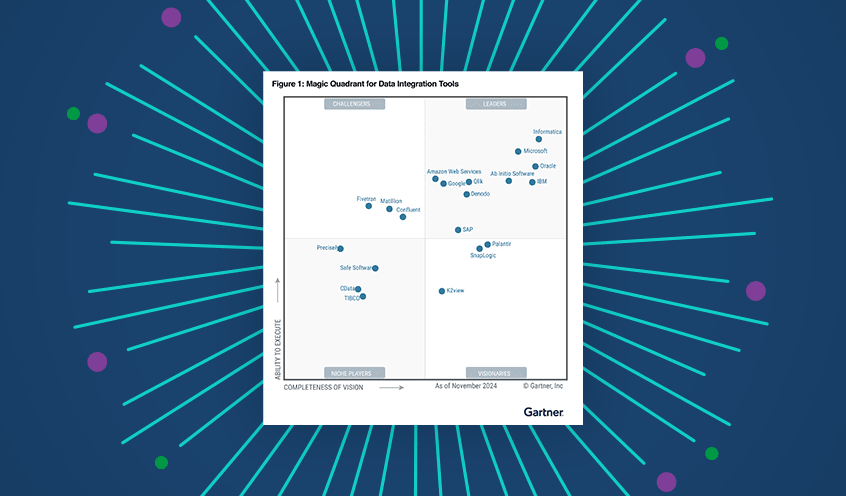

The ability to harness data from any source, enhance its quality, and create a secure, comprehensive, modern data fabric is a must-have to succeed with generative AI (and pretty much anything else you do with your data). To learn more about the art of the possible with Qlik and Talend’s data integration and quality solutions, watch this webinar.